Facebook has begun letting its partners fact-check photos and videos in addition to news articles, and proactively review stories before Facebook asks them to. Facebook is also now preemptively blocking the creation of millions of fake accounts per day. Facebook revealed this news on a conference call with journalists [Update: and later a blog post] about its election integrity efforts. The call included Chief Security Officer Alex Stamos, who is reportedly leaving Facebook later this year but claims he's still committed to the company.

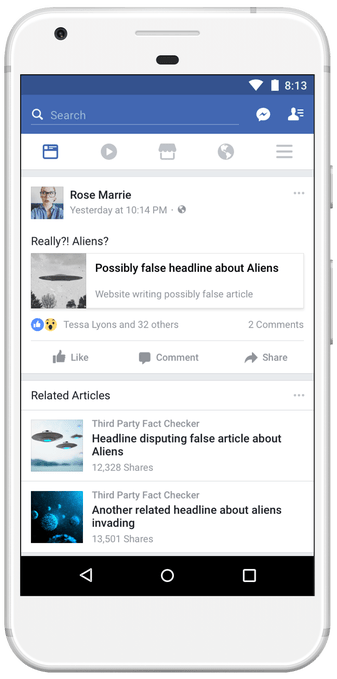

Articles flagged as false by Facebook's fact-checking partners have their reach reduced and display Related Articles below them, showing perspectives from reputable news outlets.

Stamos outlined how Facebook is building ways to address fake identities; fake audiences that are grown illicitly or pumped up to make content appear more popular; acts of spreading false information; and false narratives that are intentionally deceptive and shape people's views beyond the facts. "We're trying to develop a systematic and comprehensive approach to tackle these challenges, and then to map that approach to the needs of each country or election," says Stamos.

Samidh Chakrabarti, Facebook's product manager for civic engagement, also explained that Facebook is now proactively looking for foreign-based Pages that produce civic-related content inauthentically. It removes them from the platform if a manual review by the security team finds that they violate its terms of service.

"This proactive approach has allowed us to move more quickly and has become a really important way for us to prevent divisive or misleading memes from going viral," said Chakrabarti. Facebook first piloted this tool in the Alabama special election, has now deployed it to protect the Italian elections, and will use it for the U.S. midterm elections.

Meanwhile, advances in machine learning have allowed Facebook "to find more suspicious behaviors without assessing the content itself" and to block millions of fake account creations per day "before they can do any harm," says Chakrabarti.

Facebook implemented its first slew of election protections back in December 2016, including working with third-party fact checkers to flag articles as false. But those red flags were shown to entrench some people's belief in false stories, which led Facebook to switch to showing Related Articles with perspectives from other reputable news outlets. As of yesterday, Facebook's fact-checking partners have begun reviewing suspicious photos and videos, which can also spread false information. This could reduce the spread of false news image memes that live on Facebook and require no extra clicks to view, like doctored photos showing Parkland school shooting survivor Emma González ripping up the Constitution.

Normally, Facebook sends fact checkers stories that users are flagging and that are going viral. But now, in anticipation of elections in countries like Italy and Mexico, Facebook has enabled fact checkers to proactively flag stories, because in some cases they can identify false stories that are spreading before Facebook's own systems do. "To reduce latency in advance of elections, we wanted to ensure we gave fact checkers that ability," says Facebook's News Feed product manager Tessa Lyons.

A photo of Parkland shooting survivor Emma González ripping up a shooting range target was falsely doctored to show her ripping up the Constitution. Photo fact-checking could help Facebook prevent false images like this one from going viral. [Image via CNN]

from Social – TechCrunch https://ift.tt/2usDecF
